Fairness in ML

§1. Overview

Automated algorithms, in particular supervised classification and unsupervised ranking algorithms, are increasingly used to aid decision making. There are growing concerns that the classification or ranking models involved may be biased against certain groups of individuals. For example, some algorithmic decisions have been shown to be biased against African Americans in predicting recidivism, in NYPD stop-and-frisk decisions, and in granting loans. Similarly, some have been shown to be biased against women in job screening and in online advertising. These decisions can be biased primarily because the training data the algorithms rely on may itself be biased, often as a result of how the data were collected. Because biased decisions can have severe societal impact, several research communities are investing significant effort in fairness: two of the five best papers at ICML 2018, a premier ML conference, are on algorithmic fairness; the best paper at SIGMOD 2019, a premier database conference, is also on fairness; and a new conference dedicated to the topic, ACM FAccT (previously FAT*), was started in 2017.

§2. Our Angle: a Systems Focus on Fairness

While significant progress has been made on many aspects of algorithmic fairness, we identify three major obstacles that keep data scientists and practitioners from incorporating fairness constraints into their everyday decision making.

- Lack of a versatile and easy-to-use system. Much of the existing algorithmic fairness work, which mostly targets supervised classification tasks, consists of piecemeal solutions that apply only under specific assumptions: some apply only to specific fairness constraints (e.g., preprocessing techniques only work for statistical parity), some require modifications to ML training algorithms (e.g., in-processing techniques), and they offer varying fairness-accuracy trade-offs. Regular ML application developers without deep expertise in fairness find it difficult to incorporate fairness considerations into their development cycles.

- Lack of fairness work for unsupervised decision making. The majority of existing algorithmic fairness work focuses on supervised ML classification models for decision making, partly because classifiers are more widely used. However, in many practical scenarios decisions are made or aided by an unsupervised ranking model (e.g., employee promotion and faculty hiring). Compared with fair classification, there is little work on incorporating fairness considerations into unsupervised ranking analytics, let alone doing so in a user-friendly fashion.

- Lack of guidance in constraint specification. It is a myth that data scientists know exactly which fairness constraints apply to a given application or what bias a given model exhibits. For example, in the 1973 UC Berkeley graduate admissions case, the perceived bias against female applicants can be explained and justified: women tended to apply to departments with lower overall acceptance rates. Had users enforced a statistical parity constraint on admissions, reverse discrimination against male applicants would have resulted, which is just as undesirable.
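
The admissions example in the last item can be made concrete with a small sketch. The numbers below are synthetic and chosen only for illustration; they are not the real 1973 Berkeley figures, and the helper name `acceptance_rates` is an assumption of this sketch. It shows how an overall statistical parity gap can point one way while every per-department comparison points the other way.

```python
from collections import defaultdict

# Synthetic records (NOT the real 1973 figures):
# (gender, department, admitted_count, applicant_count)
RECORDS = [
    ("male",   "A", 80, 100),
    ("female", "A", 18, 20),
    ("male",   "B", 4, 20),
    ("female", "B", 25, 100),
]

def acceptance_rates(records, key):
    """Aggregate admitted/applied counts by key(gender, dept) and return rates."""
    admitted = defaultdict(int)
    applied = defaultdict(int)
    for gender, dept, adm, app in records:
        k = key(gender, dept)
        admitted[k] += adm
        applied[k] += app
    return {k: admitted[k] / applied[k] for k in applied}

# Overall acceptance rate per gender (what statistical parity compares).
overall = acceptance_rates(RECORDS, lambda g, d: g)

# Acceptance rate per (department, gender), i.e. conditioned on department.
per_dept = acceptance_rates(RECORDS, lambda g, d: (d, g))

# Statistical parity gap: difference in overall acceptance rates.
parity_gap = overall["male"] - overall["female"]

if __name__ == "__main__":
    print("overall:", overall)
    print("per department:", per_dept)
    print("statistical parity gap (male - female):", round(parity_gap, 4))
```

In this synthetic data the overall gap favors male applicants, yet female applicants have the higher acceptance rate within each department. The difference comes from which departments each group applied to, which is why blindly enforcing statistical parity on the aggregate would be misleading here.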

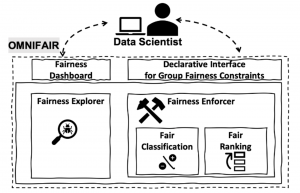

§3. Our System: OmniFair

Motivated by these observations, we propose to build a system named OmniFair, with the goal of allowing practitioners to comfortably adopt fairness constraints in their applications. At a high level, we want to (i) make fairness enforcement transparent for data scientists, allowing them to enforce fairness constraints on their models in a declarative manner without changing their algorithms; and (ii) enable fairness exploration, helping them audit their models and understand their limitations, choose proper fairness constraints for their application, and find bias justifications.
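
To give a feel for what "declarative" could mean here, the following is a minimal sketch and not OmniFair's actual interface. The names `FairnessSpec`, `positive_rate`, and `audit` are hypothetical, introduced only for this illustration. The idea is that a user states a grouping function, a metric, and a tolerance, and a generic routine checks any model's predictions against that statement without touching the training algorithm.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

@dataclass(frozen=True)
class FairnessSpec:
    """Hypothetical declarative constraint: the metric may differ by at most
    epsilon between any two groups produced by group_of."""
    group_of: Callable[[dict], str]
    metric: Callable[[Sequence[int], Sequence[int]], float]
    epsilon: float

def positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of positive predictions; equal rates mean statistical parity."""
    return sum(y_pred) / len(y_pred)

def audit(spec: FairnessSpec, rows: Sequence[dict],
          y_true: Sequence[int], y_pred: Sequence[int]
          ) -> Tuple[Dict[str, float], float, bool]:
    """Return per-group metric values, the largest gap, and whether the
    gap is within spec.epsilon. Works on predictions from any model."""
    by_group: Dict[str, Tuple[List[int], List[int]]] = {}
    for row, t, p in zip(rows, y_true, y_pred):
        truths, preds = by_group.setdefault(spec.group_of(row), ([], []))
        truths.append(t)
        preds.append(p)
    values = {g: spec.metric(ts, ps) for g, (ts, ps) in by_group.items()}
    gap = max(values.values()) - min(values.values())
    return values, gap, gap <= spec.epsilon

# Toy data, made up for this sketch.
rows = [{"sex": "f"}, {"sex": "f"}, {"sex": "m"}, {"sex": "m"}]
y_true = [1, 0, 1, 0]
y_pred = [1, 0, 1, 1]

spec = FairnessSpec(group_of=lambda r: r["sex"], metric=positive_rate, epsilon=0.1)
values, gap, satisfied = audit(spec, rows, y_true, y_pred)

if __name__ == "__main__":
    print(values, gap, satisfied)
```

Because the metric is passed in as a function, the same `audit` routine could check other group-level metrics by swapping `positive_rate` for another callable. This sketch only audits predictions; enforcing the constraint during training is a separate problem that it does not attempt.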

§4. Publications

- OmniFair: A Declarative System for Model-Agnostic Group Fairness in Machine Learning

Hantian Zhang, Xu Chu, Abolfazl Asudeh, Sham Navathe

SIGMOD 2021

§5. People

- Xu Chu (faculty lead)

- Hantian Zhang (student lead)

- Abolfazl Asudeh (collaborator)

- Sham Navathe (collaborator)